Rightly or wrongly, publications are a critical part of researchers’ career development. It’s still the case in most disciplines and countries that a “good” publication will make your career. And it’s not long ago that, if you wanted to make sure people found, read and applied your work, publishing it - whether in a relevant journal, or as a monograph or book - was enough. But in an age of information overload - and of growing metricisation - this is no longer the case. Publishers provide lots of important services but typically don’t have the capacity to undertake targeted marketing around each individual piece of work they publish. This means that much research is rarely, if ever, downloaded or read, much less applied or cited. This in turn means that researchers need to take action themselves if they want to ensure their work finds its audience.

Around the time we started Kudos, we undertook a survey of around 4,000 researchers to determine, among other things, who they felt provided them with support for increasing the visibility of their work. When asked whether their institution provided them with such support, only about 50% of respondents gave a positive response. When we’ve explored this point in interviews with researchers and institutional staff since then, it has become apparent that while institutional support does exist, it’s not always clear who provides it - making it hard for researchers to know where to turn, and indeed for institutional staff to know about each other’s efforts, let alone align and build on them.

This is the backdrop to a workshop that we led last week at the 2016 conference of ARMA (the Association of Research Managers and Administrators) (slides). Building on that last point, we started with an exercise where we explored the different teams / offices involved in increasing the visibility of research outputs, the range of names by which they are commonly known, and the intersections between them (which we didn’t explore in detail during the workshop but which I’ve attempted to represent in my diagram!). The number of sticky notes we ended up with - and the complexity of this diagram - is a pretty good indication of why researchers struggle to know where to turn for support.

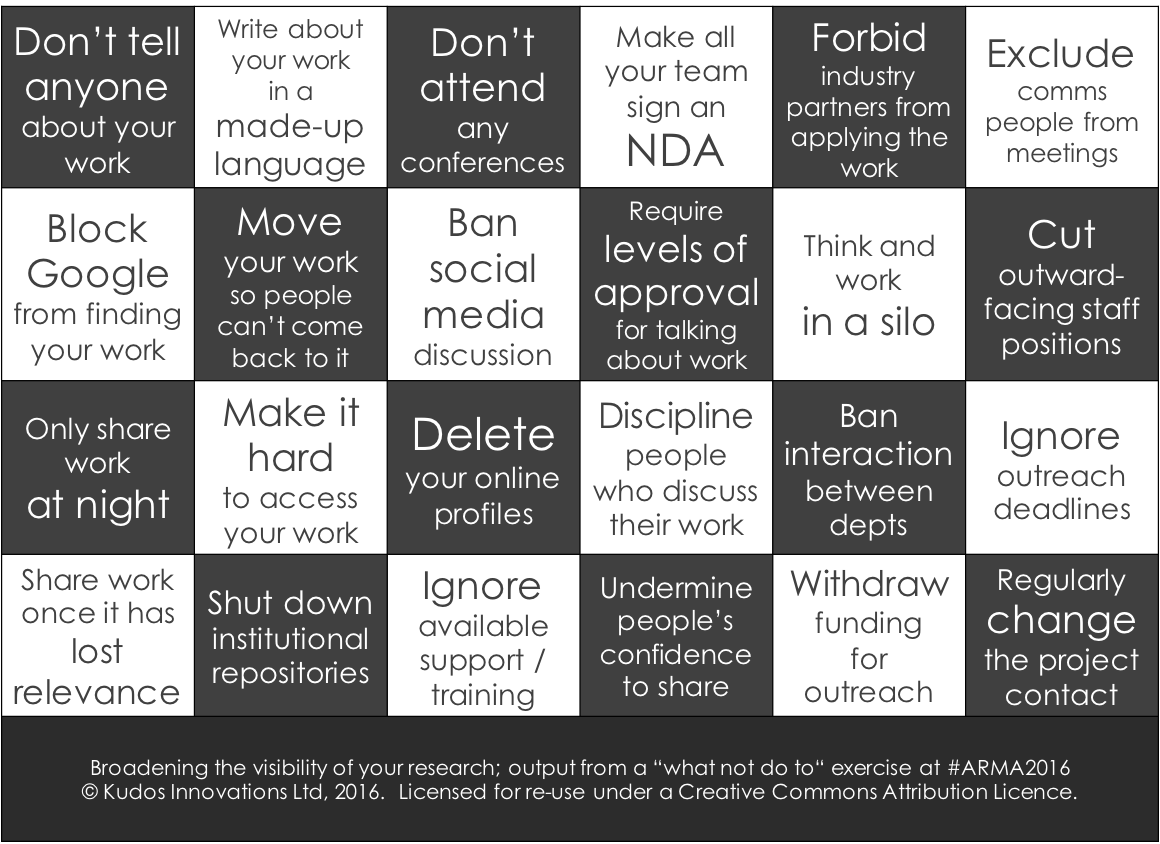

Following this initial foray into roles and responsibilities, we began to explore possible tools and techniques for how researchers and institutional staff might work together to increase visibility of research outputs. In order not to let our thinking be hampered too much by real-world experiences and constraints, we started this exercise in reverse: instead of asking ourselves (for example) “how can we maximize the audience for our research”, our breakout groups brainstormed how they would achieve the following three goals:

- make sure no-one can find, read, apply or benefit from your institution’s research

- minimize any chance of researchers participating in efforts to increase the reach and impact of their work

- make it difficult for institutional staff to collaborate in efforts to broaden reach and impact

The crazy ideas that came out of those discussions have been blended in the following graphic which I share for comedy value but also because it is the "dark side" of the "bright side" featured at the end of the post:

Open "what not to do" as a PDF

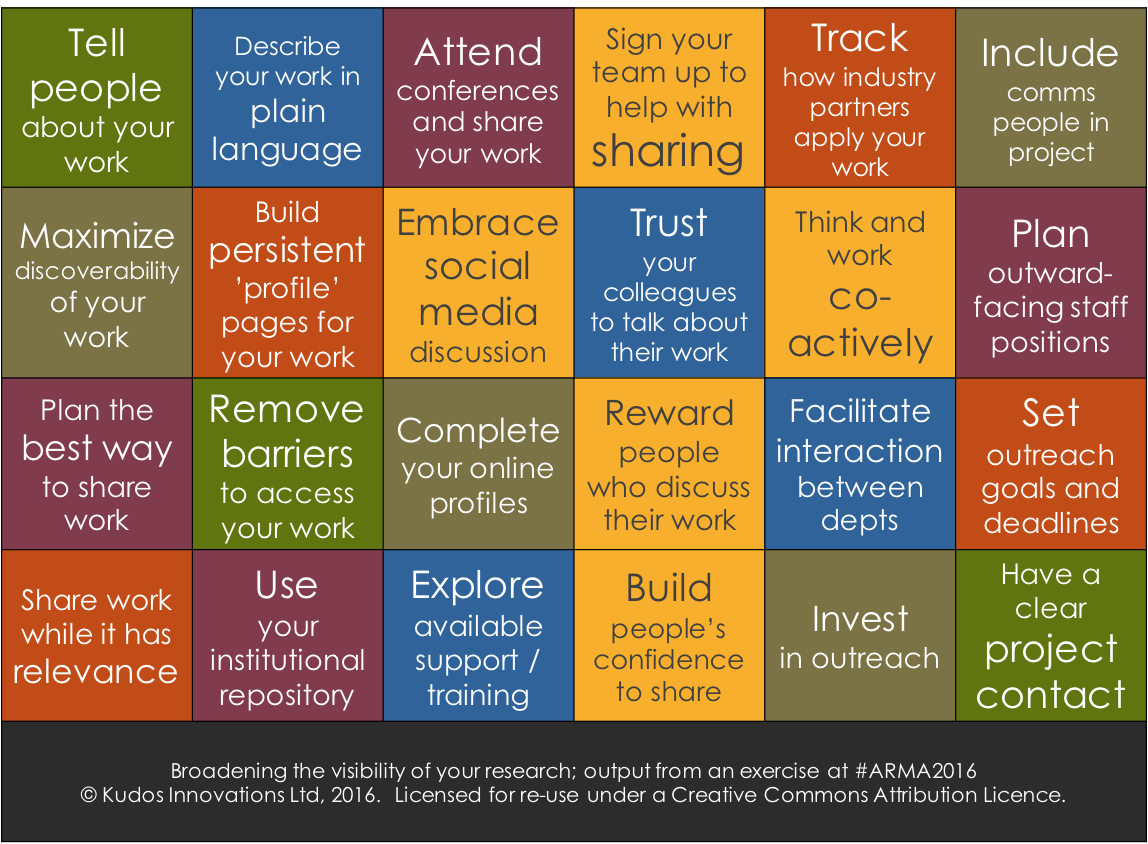

The final part of the workshop involved “flipping” - picking some of the most thought-provoking ideas from those above and considering what the opposite might be, and then brainstorming a little more about practical ways to implement those ideas. We didn’t have time to “flip” all the ideas, but I carried on playing the game on the way home, so I was able to complete the “flipped” table, below. It could be a nice graphic to use as the basis for further discussion with your colleagues - I’ve licensed it (and all these graphics) under CC-BY for that purpose. Here is a summary of the final ideas from the session:

- Sign your team up to help with sharing

    - Flipping the NDA idea - what about “disclosure agreements” to commit members of the project team to support the dissemination of their research outputs?

    - One participant described the sandpit workshops that her institution uses to brainstorm ideas and potentially win funding to support them - what about introducing a sandpit workshop with a focus on ideas for broadening the visibility and impact of work?

- Reward people who discuss their work

    - Could a framework be created to give researchers points for good communications? (One participant described an institution that allows researchers to accrue points and “buy themselves out of teaching”!)

    - What about tools for encouraging, supporting, measuring and rewarding use of social media?

    - Bit.ly (which generates trackable URLs) and Altmetric.com (which tracks the conversation around a publication) were both mentioned in this context. I noted how at Kudos we put the two together - enabling researchers to generate their trackable links in a system which then maps that activity against both Altmetrics and other data such as citations or downloads. Kudos also enables affiliated organizations (such as the researcher’s institution) to learn from and provide support when such links are shared.

- Think and work co-actively

    - Speed dating sessions could help to broaden visibility of work, whether within the institution (across departments) or potentially with representatives from the media, citizen scientists, etc.

    - We also talked about initiatives such as centres for local engagement or for facilitating more productive relationships with industry

- Build people’s confidence to share

    - Mentoring schemes and confidence coaching could be introduced to give people confidence to communicate around their work (we particularly discussed how post-graduate and early career researchers benefit from regular sessions with external leaders - and one participant mentioned “The art of being brilliant” sessions)

    - There are lots of sources of tips for keeping explanations of your work simple (our own series on this topic includes some links) - and what about bringing in professional / interdisciplinary support for writing about research for different audiences?

- Measure, refine, celebrate

    - We touched on the importance of having clear objectives for any of these activities, and mechanisms for measuring and capturing evidence of success - the LSE Impact Blog and the excellent guidance it gives was mentioned in this context, and I like to point to Melissa Terras’ repository project as an example of a relatively simple but useful approach to evaluating outreach activities.

Many thanks to all who participated in the workshop and to the excellent #ARMA2016 conference for the facilities.